Some non-network related thoughts in which I reflect on the self-inflicted social media disaster that began with a blog post about a new Raspberry Pi staff team hire.

Video doorbell

Not really a huge fan of these things, but after missing a few deliveries it’s really a must. So I’ve got one – a Lorex 2k QHD Wired Video Doorbell.

Don’t let that word “Wired” fool you, this is a Wi-Fi device. It takes power from an existing doorbell transformer and runs on 16-24V AC.

It’s part of the Lorex Fusion Collection so there’s a network NVR and a range of cameras that work alongside it. I chose this as much for what it isn’t… it isn’t a Ring. It also ought to be easy to install, comes with a chime kit for linking an existing mechanical doorbell chime, doesn’t look too bad, records locally onto SD card and you can stream the video from it with accessible RTSP feeds:

Main stream: rtsp://ip/cam/realmonitor?channel=1&subtype=0

Sub stream: rtsp://ip/cam/realmonitor?channel=1&subtype=1
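If you want to feed these streams into something else, a small helper keeps the URL pattern in one place. This is only a sketch: the function name, the optional credentials in the URL and the example IP address are my assumptions, only the path and query string come from the two URLs above.

```python
from typing import Optional
from urllib.parse import quote


def lorex_rtsp_url(host: str, subtype: int = 0, channel: int = 1,
                   user: Optional[str] = None,
                   password: Optional[str] = None) -> str:
    """Build an RTSP URL in the pattern quoted above.

    subtype 0 is the main stream, subtype 1 is the sub stream.
    """
    if subtype not in (0, 1):
        raise ValueError("subtype must be 0 (main) or 1 (sub)")
    # Putting credentials in the URL is an assumption. They are
    # percent-encoded so characters like '@' or ':' don't break the URL.
    auth = ""
    if user is not None:
        auth = quote(user, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return (f"rtsp://{auth}{host}/cam/realmonitor"
            f"?channel={channel}&subtype={subtype}")


if __name__ == "__main__":
    # 192.0.2.10 is a documentation address, not a real doorbell.
    print(lorex_rtsp_url("192.0.2.10", subtype=0))
    print(lorex_rtsp_url("192.0.2.10", subtype=1))
```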

Despite taking care to check the Wi-Fi performance in the installation location I made a major error and didn’t test with the door closed. It seems my front door presents significant attenuation as does seemingly every wall in my house. This seems to be a particular feature of many new build houses, with the foil backed insulation and plasterboard. I also suspect the Wi-Fi radio/antenna in the doorbell isn’t great.

One update, post this video being completed, is the notification issue. If notifications are disabled for the device you will still be notified if someone presses the doorbell. This solves the notification fatigue issue I referenced, it just isn’t at all clear in the app this is how it works.

Big, fat, bloaty channels

Dip your toes into the world of enterprise Wi-Fi and the mantra is “only use 20MHz wide channels” yet this is not the default for most vendors, and then you might notice pretty much every ISP router supplied to domestic customers (at least in the UK) is using 80MHz channels… so what gives and when are these big bloated wide channels a good idea?

Perhaps the first thing to understand is what this even means. We’re talking about the 5GHz band ranging from 5150MHz to 5850MHz. For Wi-Fi this is divided up into 20MHz channels, although not all of this spectrum is available in all countries. In the UK most enterprise Wi-Fi vendors offer 24 channels for indoor use. A 40MHz channel is simply two neighbouring 20MHz channels taped together. (more information can be found in Nigel Bowden’s whitepaper)

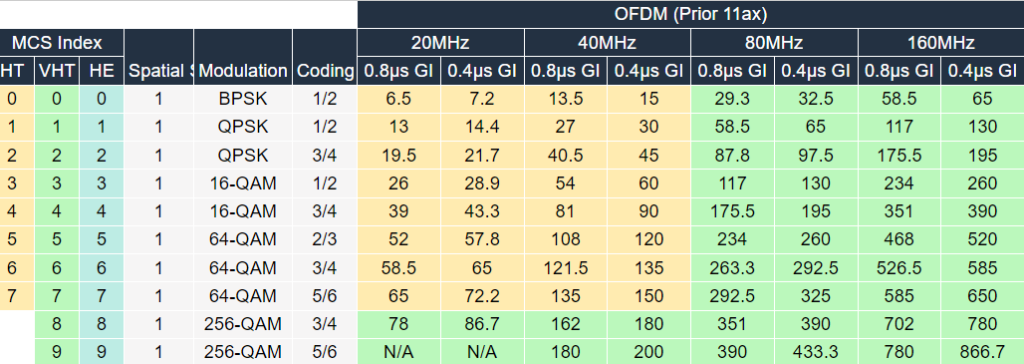

Wi-Fi speed depends on a lot of variables but chiefly it comes down to the Modulation & Coding Scheme (MCS), the number of spatial streams supported by the client and Access Point (two spatial streams is twice the speed of one, for example) and the channel width being used. A 40MHz channel has double the throughput capacity of a 20MHz channel (actually it’s ever so slightly more than double, but let’s keep it simple) and 80MHz can double that again.

Back to ISPs. BT currently recommend I take up their full fibre service offering 150Mbps download speed. I’m going to expect to see that when I run a speedtest from my iPhone. So what does that mean for the Wi-Fi?

The first thing to identify is that my client, the iPhone XS Max, supports Wi-Fi5 (802.11ac) with one spatial stream. So if we take a look at the MCS table (we’re interested in the VHT column) the fastest speed we can achieve is 86.7Mbps for a 20MHz channel. Importantly this is the raw link speed, various overheads mean you’re not going to see that from your speedtest application. What’s more this is the best we can do in ideal circumstances. If my Wi-Fi router is a room or two away it’s unlikely the link will reliably achieve that MCS Index of 8.

So why does the BT router use 80MHz channels when it looks like a 40MHz channel should let us reach our 150Mbps line rate?

Two reasons. Firstly, BT sell services with a faster line rate of around 500Mbps. Secondly, remember these highest speeds are only reached in optimum conditions. So by using an 80MHz channel, we’ve got up to 433.3Mbps of Wi-Fi capacity for our single stream client, which increases the chances of hitting a real world 150Mbps throughput around the house.
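The link rates quoted here can be reproduced from the 802.11ac (VHT) numbers: data subcarriers per channel width, bits per subcarrier, coding rate and symbol time. The sketch below assumes the short guard interval (3.6µs per symbol) and only covers MCS 8 and 9. The function and table names are mine.

```python
from fractions import Fraction

# Data subcarriers per VHT (802.11ac) channel width in MHz.
DATA_SUBCARRIERS = {20: 52, 40: 108, 80: 234}

# (bits per subcarrier, coding rate) for the two highest VHT MCS indexes.
MCS = {
    8: (8, Fraction(3, 4)),  # 256-QAM, rate 3/4
    9: (8, Fraction(5, 6)),  # 256-QAM, rate 5/6
}

# 3.2 us symbol plus 0.4 us short guard interval.
SYMBOL_US = Fraction(36, 10)


def vht_rate_mbps(width_mhz: int, mcs: int, streams: int = 1) -> float:
    """Raw PHY link rate in Mbps, before any protocol overhead."""
    bits_per_subcarrier, coding_rate = MCS[mcs]
    bits_per_symbol = (DATA_SUBCARRIERS[width_mhz] * bits_per_subcarrier
                       * coding_rate * streams)
    # Bits per microsecond is the same thing as Mbps.
    return float(bits_per_symbol / SYMBOL_US)


if __name__ == "__main__":
    # MCS 9 is not a valid combination for one stream at 20MHz,
    # which is why MCS 8 is the ceiling there.
    print(round(vht_rate_mbps(20, 8), 1))  # 86.7
    print(round(vht_rate_mbps(40, 9), 1))  # 200.0
    print(round(vht_rate_mbps(80, 9), 1))  # 433.3
```

These are best-case link rates for a single spatial stream. Real throughput will be lower once overheads and a less than ideal MCS are taken into account.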

“So what?” you may ask. Well you don’t get something for nothing, there’s always a trade-off. Remember Wi-Fi only has a finite amount of channel capacity and we need to be deliberate in how that’s used.

For enterprise networks we’re typically less concerned with the maximum throughput a client can achieve versus the aggregate throughput of the whole network. Basically, it’s not about you it’s about us.

Creating good coverage for an office space means multiple access points. We ideally want each of those access points to be on a separate channel, or at least for APs on the same channel to be as far apart as possible. Because using wider channels limits how many you can have, we can reduce the effectiveness of channel reuse in larger networks. That means increased risk of interference between APs, resulting in collisions, lower SNR and ultimately lowering throughput.

This is why a large, busy network running on 80MHz channels can be expected to have lower aggregate throughput than with 40 or 20MHz channels.

There’s also the important matter of noise.

Noise is signal on our channel, picked up by the receiver, that isn’t useful signal we can decode. The key to achieving a high MCS value is a high Signal to Noise Ratio (SNR). For each doubling of the channel width (from 20, to 40, to 80) the noise level is doubled too.
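To put a number on that doubling, here is a rough sketch of the thermal noise floor for each channel width. It assumes the usual room temperature figure of about -174dBm per Hz and ignores the receiver's noise figure and any interference from other networks, so treat the absolute values as illustrative. The 3dB step per doubling is the point.

```python
import math

# Approximate thermal noise density at room temperature (assumption).
NOISE_DENSITY_DBM_PER_HZ = -174.0


def thermal_noise_dbm(bandwidth_hz: float) -> float:
    """Thermal noise power in dBm across the given bandwidth."""
    return NOISE_DENSITY_DBM_PER_HZ + 10.0 * math.log10(bandwidth_hz)


if __name__ == "__main__":
    for width_mhz in (20, 40, 80):
        noise = thermal_noise_dbm(width_mhz * 1e6)
        print(f"{width_mhz}MHz: {noise:.1f} dBm")
```

Each doubling of width raises the noise floor by roughly 3dB, so for the same received signal strength the SNR drops by the same amount.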

Back to ISPs… again. My hypothetical BT router is running on the same UNII-1 80MHz channel as my neighbours either side. Which means there’s a very high chance of interference. So although BT have chosen this bloater of a channel to improve throughput, it could do the opposite. In most cases you get away with it because our houses provide sufficient attenuation, especially at 5GHz. But in densely populated areas, flats for example, it can be the case that neighbouring Wi-Fi networks are really very strong.

Which, finally, brings me to where you can successfully use these wide channels: anywhere you’re not competing for channel space.

So for a small network install in an area that doesn’t have neighbouring networks it can work really well. I’ve tested using 80MHz channels with my home network, simply because I can. The house and my home office have foil backed insulation which does a good job of blocking Wi-Fi. What’s more the ISP supplied routers all tend to use UNII-1 channels – the first four of the band. I’m using Aruba enterprise APs so can select other channels that nobody is using nearby.

And so we reach some sort of conclusion which is, yes, 20MHz channels are still the right way to go for most enterprise deployments. You can use wider channels if you know you have the capacity for them and you’re not ruining your channel re-use plans. At home, if you’re not getting anywhere near the throughput you think you should, you might be suffering from everyone effectively using the same channel. But don’t forget to test with a few different devices, your phone is probably the worst-case scenario.

Incrementing a ClearPass Endpoint attribute

This post is based on the SQL query found here. It’s clearly a fairly niche requirement but it’s come in very handy.

Let me set the scene… An open Wi-Fi network is provided for a major public event but is only there for accredited users, not the general public, authenticated with a captive portal. Some members of the public will no doubt try connecting to the network, reach the CP and find they can’t get anywhere.

The problem is every connection consumes resources. If enough people do this there could be DHCP exhaustion and issues with the association table filling up. Assuming everything is sized appropriately it’s likely to be the number of associations that are the primary concern.

There are various answers to this configuration, most of which revolve around better security in the first place, but there are good reasons it’s done this way… let’s move on.

MAC caching is being used by ClearPass so the logic says: “do I know this client and if so is the associated user account valid? If so return the happy user role, if not return the portal role”.

This means there are no auth failures – we’re never sending a reject, ClearPass returns the appropriate role.

What we want to do is identify clients that don’t go through captive portal authentication, and therefore just keep being given the portal role.

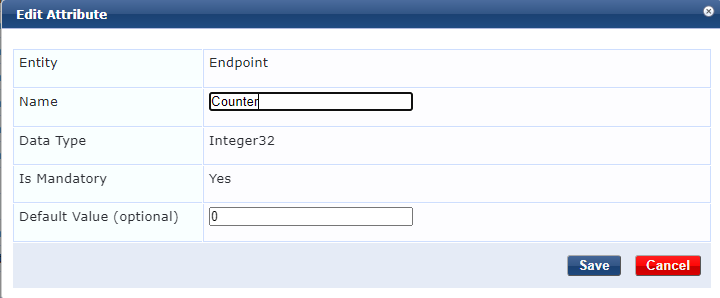

I added an Endpoint attribute of “Counter” (Administration\Dictionary Attributes)

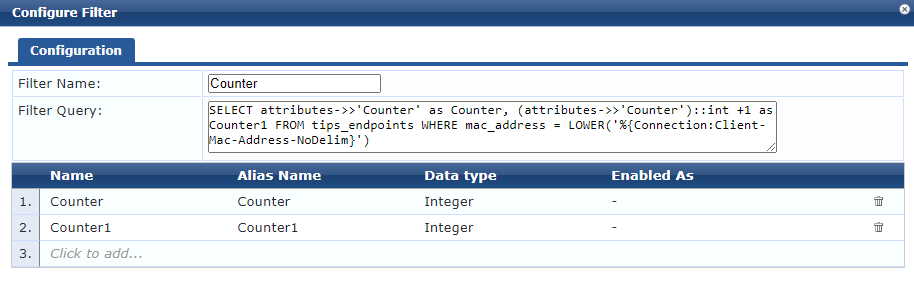

Next a custom filter is added to the Endpoints Repository. This query (courtesy of the wonderful Herman Robers) reads the counter attribute into a variable of “Counter”. It also reads the counter attribute and adds 1 for the variable “Counter1”.

SELECT attributes->>'Counter' as Counter, (attributes->>'Counter')::int +1 as Counter1 FROM tips_endpoints WHERE mac_address = LOWER('%{Connection:Client-Mac-Address-NoDelim}')

Add this as an attributes filter under Authentication\Sources\Endpoints Repository

Within a Dot1X or MAC-auth service you can then call the variable: %{Authorization:[Endpoints Repository]:Counter1}

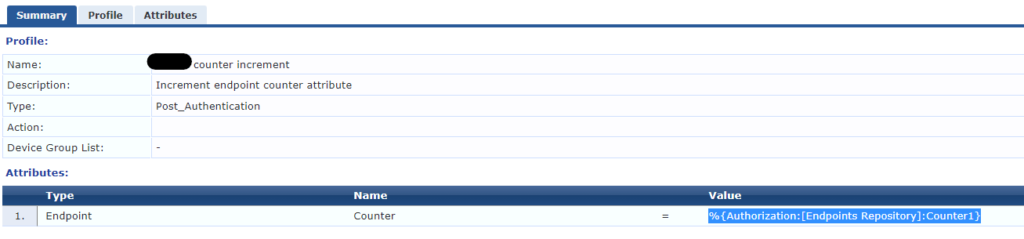

An enforcement profile is created to update the endpoint with the contents of Counter1 and this is applied alongside the portal role.

The result is each time a client hits the portal we also increment the counter number. At a threshold, to be determined by the environment, we start sending a deny. In my testing I set this to something like 5 to prove it worked.

This works well but a persistent client can just keep trying to connect which can still consume some resource as the AP has to generate auth traffic.

In this case the network is using Aruba Instant APs which have a dynamic denylist function. This was set to block clients for one hour after 2 authentication failures.

What happens now is after a client has hit the portal 5 times, ClearPass sends a reject, the client almost immediately tries again and is rejected, at which point it’s added to the denylist and can no longer associate with the network.
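The combined behaviour can be modelled in a few lines. This is a simulation of the logic only, not ClearPass or Instant AP configuration: the class, function and role names are made up for illustration, and the thresholds are the test values mentioned above.

```python
from dataclasses import dataclass

PORTAL_THRESHOLD = 5   # portal hits before ClearPass starts rejecting
DENYLIST_FAILURES = 2  # auth failures before the AP denylists the client


@dataclass
class Endpoint:
    counter: int = 0             # stands in for the "Counter" attribute
    auth_failures: int = 0       # tracked by the AP, not by ClearPass
    denylisted: bool = False
    authenticated: bool = False  # True once the portal login succeeds


def handle_connection(ep: Endpoint) -> str:
    """Return what happens when this client tries to connect."""
    if ep.denylisted:
        return "blocked"          # the AP refuses the association
    if ep.authenticated:
        return "user-role"        # known client with a valid account
    if ep.counter >= PORTAL_THRESHOLD:
        ep.auth_failures += 1     # ClearPass sends a reject
        if ep.auth_failures >= DENYLIST_FAILURES:
            ep.denylisted = True  # dynamic denylist kicks in
        return "reject"
    ep.counter += 1               # enforcement profile writes Counter1 back
    return "portal-role"


if __name__ == "__main__":
    client = Endpoint()
    for attempt in range(1, 9):
        print(attempt, handle_connection(client))
```

Running it shows five portal roles, two rejects and then the client being blocked, which matches the sequence described above.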

There are risks to this approach – it’s easy to see you could end up with false positives being denylisted. Clearly a better overall solution would be to avoid deploying an open network but that opens a whole other can of worms when dealing with a very large number of BYOD users.

On-Prem is cheaper than cloud…

I came across this tweet that got a few people talking:

For a long time the rhetoric I heard at my previous employer was “The cloud is just someone else’s computer”… which is true of course but intrinsic to that comment is that if you have computers of your own why would you need to use someone else’s.

That changed at some stage, as fashion does, and where once it was clearly far more sensible to continue to use our own, ‘on-prem’ DCs these suddenly became hugely expensive and the TCO of cloud looked a lot better.

So is on-prem cheaper than cloud? Well… yes and no… like so many things, it depends.

Most often you can spin the figures to suit the case you want to make. For example the cloud spend is all coming from the IT budget whereas the responsibility for something like aircon maintenance for the DC building might sit with the estates department. Costs can be moved around, included or excluded depending on the outcome you want – or maybe how honest you are…

Personally I think the true TCO of on-prem DC stuff ought to accurately reflect how much it costs the organisation rather than how much of it sits in one budget, but that’s just me and my simplistic view of how accounting ought to work.

Also, what’s the space worth and has its cost been written off? A dedicated, fully owned DC building as part of a university campus has a very different value, and therefore potential cost, to DC space within a building in a city. Basically what else could that space resource be used for and is it potentially worth much more filled with people rather than computers.

The size of an organisation makes a huge difference. One developer with a good idea can use AWS or Azure and throw together a level of infrastructure that would need at least 10s of thousands to achieve with tin.

However just as the organisations with ‘legacy’ on-prem DCs are looking at their maintenance & replacement budgets with a heavy heart, those startups that grew as cloud native might well be looking at their monthly cloud fees and sighing just as heavily.

I’m personally a big fan of the hybrid approach. Some workloads work brilliantly in the cloud, others less so…

What that tweet pushes back against is the idea cloud is cheaper because it just is. That patently isn’t true and many have been burned by just how high their CSP bills are.

AOS-Switch (2930) failing to download ClearPass CA certificate

tl;dr – Check the clocks, check the well-known URL on ClearPass is reachable, check you’ve allowed HTTP access to ClearPass from the switch management subnet.

Another in my series of simple issues that have caught me out, yet don’t seem to have any google hits.

When you implement downloadable user roles from ClearPass with an Aruba switch the switch uses HTTPS to fetch the role passed in the RADIUS attribute.

There are a few things you need in place to make this all work but the overall config isn’t in scope for this post. The key thing I want to focus on, that caught me out recently, is how the switch validates the ClearPass HTTPS certificate.

With AOS-CX switches (e.g. 6300) the certificate can simply be pasted into the config using the following commands:

crypto pki ta-profile <name>

ta-certificate

<paste your cert here>

You don’t need the full trust chain either, if your HTTPS cert was issued by an intermediate CA you only need to provide that cert, though it doesn’t hurt to add the root CA as well.

With AOS-Switch OS based hardware (e.g. 2930f) you can’t paste the cert in, your CLI option is uploading it via TFTP.

Fortunately there’s a much easier way of doing this – an AOS-Switch will automatically download the CA cert from ClearPass using a well-known URL – specifically this one:

http://<clearpass-fqdn>/.well-known/aruba/clearpass/https-root.pem

You have to tell the switch your RADIUS server is ClearPass by adding “clearpass” to the host entry – but I did say I wasn’t going to get into the config.

Recently I had a site where this didn’t work. The switch helpfully logged:

CADownload: ST1-CMDR: Failed to download the certificate from <my clearpass FQDN> server

This leads to:

dca: ST1-CMDR: macAuth client <MAC> on port 1/8 assigned to initial role as downloading failed for user role

and:

ST1-CMDR: Failed to apply user role <rolename> to macAuth client <MAC> on port 1/8: user role is invalid

So what was wrong? In this case it was super simple. The route to ClearPass was via a firewall that wasn’t allowing HTTP access.

Other things to check are clocks – both the switch and ClearPass – always use NTP if you can. Also there have been ClearPass bugs introduced in some versions that break the well-known URL so it’s worth checking the URL is working. There can also be some confusion between RSA and ECC certificates, which ClearPass now supports. The switch will use RSA.
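A quick way to check the well-known URL is to fetch it yourself and see whether something that looks like a PEM certificate comes back. The sketch below uses only the Python standard library. The function names and example hostname are my own, and to test the firewall path it needs to run from a host on the switch management subnet.

```python
import urllib.request

WELL_KNOWN_PATH = "/.well-known/aruba/clearpass/https-root.pem"


def ca_url(clearpass_fqdn: str) -> str:
    """Plain HTTP URL the switch uses to fetch the CA certificate."""
    return f"http://{clearpass_fqdn}{WELL_KNOWN_PATH}"


def looks_like_pem(text: str) -> bool:
    """Cheap sanity check, not a full certificate validation."""
    return ("-----BEGIN CERTIFICATE-----" in text
            and "-----END CERTIFICATE-----" in text)


def check_ca_download(clearpass_fqdn: str, timeout: float = 5.0) -> bool:
    """Return True if the well-known URL serves a PEM certificate."""
    try:
        with urllib.request.urlopen(ca_url(clearpass_fqdn),
                                    timeout=timeout) as resp:
            body = resp.read().decode("ascii", errors="replace")
    except OSError as exc:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        print(f"download failed: {exc}")
        return False
    return looks_like_pem(body)


# Example, with a hypothetical hostname:
#   check_ca_download("clearpass.example.com")
```

If this times out from the management subnet but works elsewhere, a firewall blocking HTTP is the likely culprit.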

Wi-Fi Capacity… Just what do you need?

Prompted by Peter Mackenzie’s excellent talk (of course it was) at WLPC 2022 titled “It is Impossible to Calculate Wi-Fi Capacity” I wanted to share some real world experience. I’ll also link to the presentation at the bottom of this page – you should watch it if you haven’t already.

In this talk Peter explores what we mean by capacity planning and amusingly pokes fun at the results of blindly following certain assumptions.

There’s also a look at some fascinating data from Juniper Mist showing real world throughput of all Mist APs within a particular time frame. It’s a huge data set and provides compelling evidence to back up what many of us have long known, namely: you don’t need the capacity you think you do, and the devices/bandwidth per person calculations are usually garbage.

I have walked in to a university library building, full to bursting with students working towards their Easter exams. Every desk is full, beanbags all over the floor in larger rooms to provide the physical capacity for everyone who needs to be in there. Everyone’s on the Wi-Fi (ok not everyone but most people) with a laptop and smartphone/tablet. Tunes being streamed by many.

I can’t remember the overall numbers but over 50 clients on most APs. In these circumstances the average throughput on APs would climb to something like 5Mb.

Similarly a collection of accommodation buildings with about 800 rooms, lots of gaming, netflix and generally high bandwidth stuff going on, the uplinks from the distribution router would almost never trouble a gigabit.

These are averages of course, which is how we tend to look at enterprise networks for capacity planning. We’re interested in trends and, on the distribution side, making sure we’re sitting up and taking notice if we get near 70% link utilization, perhaps lower in many cases.

In fact the wired network is where this gets really interesting. This particular campus at one time had a 1Gb link between two very busy routing switches that spent a lot of its time saturated. This had a huge impact on the network performance. This was doubled up (LACP) to a 2Gb link and the problem went away.

Of course this is quite a while ago. Links were upgraded to 10Gb and then 40Gb, but another interesting place to look is the off-site link. As with any campus that has its own DCs some of the network traffic is to and from local resources, but the vast majority of Wi-Fi traffic was to and from the Internet. The traffic graph on the internet connection always mirrored the Wi-Fi controllers.

At busy times with 20,000 users on the Wi-Fi, across over 2,500 APs we would see maybe 4-6Gb of traffic.
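Dividing those figures out makes the point. A quick sketch, treating the 4-6Gb as gigabits per second and using 2,500 as the AP count (it was "over 2,500", so the per-AP numbers are an upper bound):

```python
def per_unit_mbps(total_gbps: float, units: int) -> float:
    """Average Mbps per user or per AP for a given total in Gbps."""
    return total_gbps * 1000 / units


if __name__ == "__main__":
    users, aps = 20_000, 2_500
    for total in (4, 6):
        print(f"{total}Gb total: "
              f"{per_unit_mbps(total, users):.2f} Mbps per user, "
              f"{per_unit_mbps(total, aps):.1f} Mbps per AP")
```

That works out at roughly 0.2 to 0.3Mbps per user and around 2Mbps per AP on average, a long way below what per-person bandwidth calculations tend to assume.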

You will always have examples where users need high performance Wi-Fi and are genuinely moving a lot of data. However the vast majority of users are simply not doing this. Consequently I could walk into the library, associate with an elderly AP that already has 58 clients associated and happily get 50Mb on an internet speed test.

I’ve shared my thoughts before about capacity considerations, which Peter also touches on in his talk. Suffice to say I think Peter is absolutely right in what he says here. With exceptions, such as applications like VoIP with predictable bandwidth requirements, we have a tendency to significantly over-estimate the bandwidth requirements of our networks and the assumptions on which those assessments are made will often be misleading at best.

ClearPass Guest Sponsor Lookup

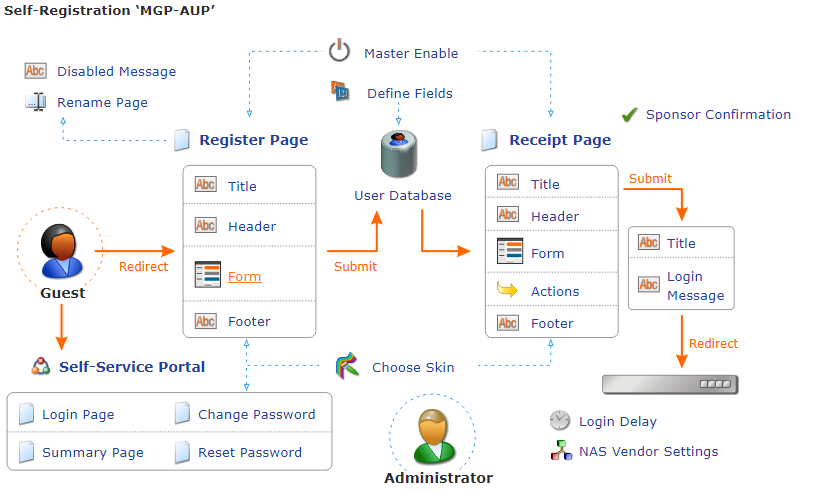

Guest user self-registration is one of my favourite things. It allows users to create their own account without invoking a helpdesk ticket. Sponsorship means an account has to be approved before it becomes active.

Typically e-mail is used to reach the sponsor and tends to be specified as a hidden field in the form, a drop down menu or an LDAP search.

I recently configured this for a customer who wanted to search their on site Active Directory for the sponsor, specifically users within a group of which only other groups were a member. A nested group. Nested groups are very common in organisations but I struggled to find some clear documentation on how to make it work for this particular use case.

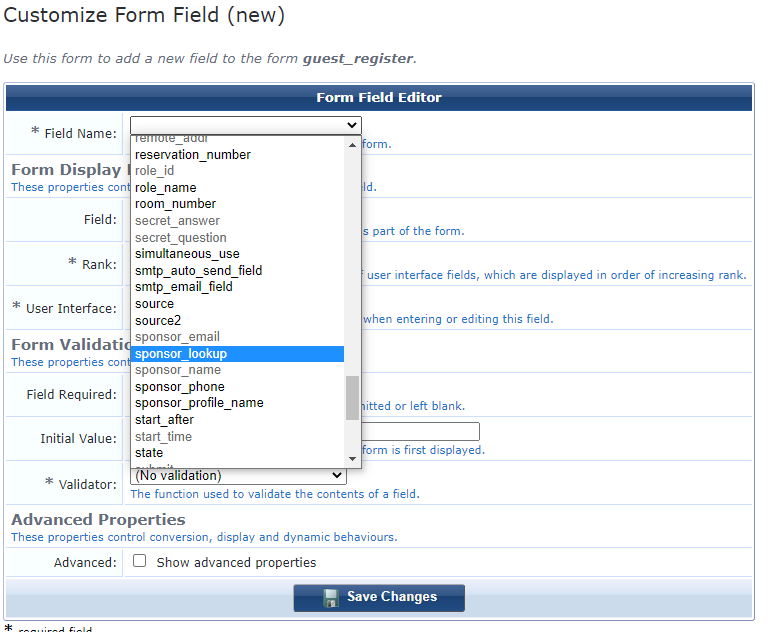

First add the sponsor lookup field to your self-registration form

To do this open the form and in the appropriate place add a field. Then on the form field editor, select sponsor_lookup. You probably want this to be a required field.

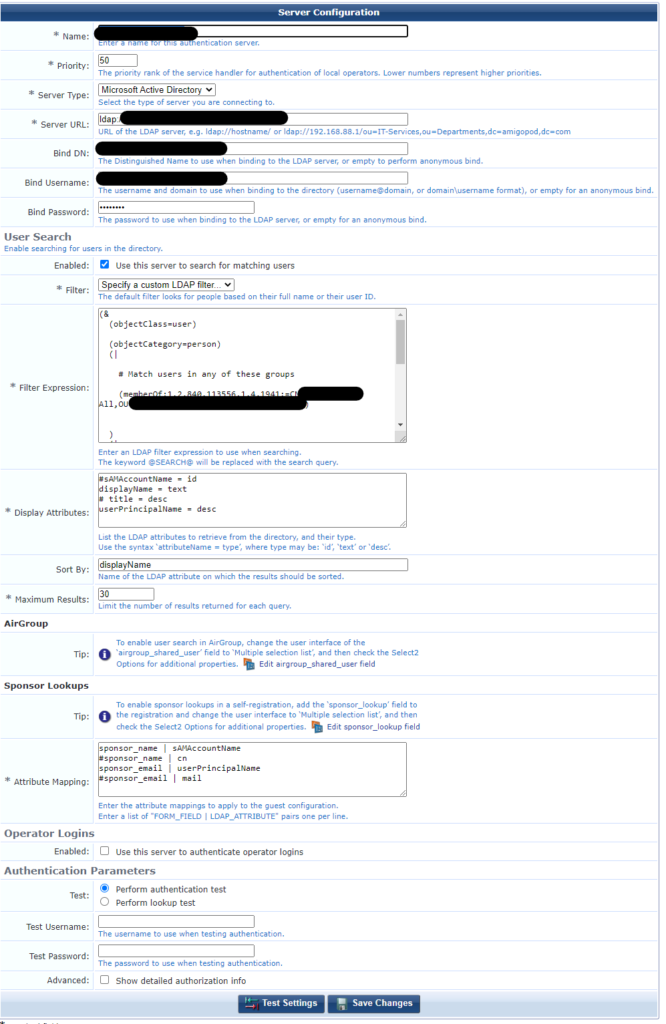

You also need to add the LDAP server to ClearPass Guest.

From Administration > Operator Logins > Servers select “Create new LDAP server”.

If this is an AD server it will be using LDAP v3. ClearPass Guest automatically uses this version of the protocol when Active Directory is selected as the server type.

Enter the server URL in the format ldap://<servername>/dc=<domain>,dc=<suffix>

The Bind DN and Bind Username will likely be the same <user>@<domain>

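At this point, you should be able to perform lookups or searches against the directory. In my case I needed to restrict the search to a distribution group, including members of its nested groups. This uses the Active Directory LDAP matching rule OID 1.2.840.113556.1.4.1941 (LDAP_MATCHING_RULE_IN_CHAIN), which follows the chain of nested group membership.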

So, as per the screenshot above, choose a custom LDAP filter. Here’s the filter I’ve used:

(&

(objectClass=user)

(objectCategory=person)

(|

# Match users in any of these groups

(memberOf:1.2.840.113556.1.4.1941:=CN=groupname,OU=ou-name,DC=domain,DC=com)

)

(|

# Match users by any of these criteria

(sAMAccountName=*@SEARCH@*)

(displayName=*@SEARCH@*)

(cn=*@SEARCH@*)

(sn=*@SEARCH@*)

(givenName=*@SEARCH@*)

)

)

As the comment suggests you can add more groups to the search. For a primary group that users are members of, the format is (memberOf=CN=Wireless,CN=Users,DC=clearpass,DC=aruba,DC=com)
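If you need to maintain this filter for several groups, it can be generated rather than hand edited. The sketch below builds a compact single-line version of the same filter. The function names are mine, the @SEARCH@ placeholder is left for ClearPass Guest to substitute, and I'm assuming the single-line form is accepted in the same way as the multi-line one above.

```python
from typing import Iterable

# Active Directory matching rule that follows nested group membership.
IN_CHAIN = "1.2.840.113556.1.4.1941"

# Attributes the sponsor search term is matched against.
SEARCH_ATTRS = ("sAMAccountName", "displayName", "cn", "sn", "givenName")


def escape_filter_value(value: str) -> str:
    """Escape characters that are special in LDAP filter values (RFC 4515)."""
    out = []
    for ch in value:
        if ch in "\\*()\x00":
            out.append(f"\\{ord(ch):02x}")
        else:
            out.append(ch)
    return "".join(out)


def sponsor_filter(group_dns: Iterable[str]) -> str:
    """Build the sponsor lookup filter for one or more group DNs."""
    groups = "".join(
        f"(memberOf:{IN_CHAIN}:={escape_filter_value(dn)})"
        for dn in group_dns
    )
    terms = "".join(f"({attr}=*@SEARCH@*)" for attr in SEARCH_ATTRS)
    return ("(&(objectClass=user)(objectCategory=person)"
            f"(|{groups})(|{terms}))")


if __name__ == "__main__":
    print(sponsor_filter(["CN=groupname,OU=ou-name,DC=domain,DC=com"]))
```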

This query worked a treat and meant that, when searching, guests wouldn’t be presented with accounts for admin users or meeting rooms.

Reflecting on 2021

Another flippin’ year goes by with curtailed social interactions, travel and that raised baseline of anxiety but aside from all that, here’s what I’m thinking.

It’s been another tricky year for lots of businesses. Network assets get sweated for a bit longer because everyone’s a little uncertain, so you don’t spend money you don’t need to. For me, that meant the year began with the threat of redundancy. More on that later…

Wi-Fi continues not to be taken quite as seriously as it should be by most enterprises. As Keith Parsons commented in his London Wi-Fi Design Day talk, Wi-Fi doesn’t punish you for doing it wrong. That’s not strictly true as a really badly designed network can fall apart entirely under load, something I’ve seen a few times, but his point stands; namely you can throw a load of APs into a space with very little thought and… it works.

An interesting challenge I’ve encountered has been organisations ignoring the design, doing what they’ve always done, and then complaining they have issues. I’ve also encountered an organisation stating “Wi-Fi is not business critical” when clearly it is because as soon as there are issues it becomes the highest priority. In many circumstances Wi-Fi is the edge everyone uses. Not only is Wi-Fi as business critical as the wired network, in many cases it’s more so. Of course the wired edge supports the wireless edge, we need it all to work.

And on that subject, we continue to see a separation between wired and wireless in many network teams. In my mind this doesn’t make sense a lot of the time and I consider the wireless and wired edge to be the same domain. Plus we all know the wires are easier.

Wi-Fi6E has arrived and will totally solve all our problems… It’s something I’ve yet to play with, but lacking either AP or client that will have to wait. Wi-Fi6 (802.11ax) has arguably so far failed to deliver on the huge efficiency increase promised I think because legacy clients persist, and will do for many years to come, but also because the scheduling critical to OFDMA is probably not where it needs to be.

It was also really disappointing to see Ofcom release such a small amount of 6GHz bandwidth here in the UK.

This has been another year of firsts for me, working with high profile clients, learning new technologies (gaining Checkpoint certifications), embracing project management (I’m a certified PRINCE2 Practitioner).

It’s also particularly struck me how much my so-called “career” has been held back by my ethics and what I could refer to as loyalty but, if I’m honest, is probably comfort. I don’t have a complaint about this, I am who I am, this is just an observation.

I’ve been approached for, and turned down, extremely well paid roles working for organisations whose core purpose is something I cannot support. I’m happy with this. If I’m not working to make the world better, I at least need to feel I’m not actively making it worse.

This is no judgement on anyone who does work in areas I disagree with btw. We all have to make our own decisions about what matters in life.

The loyalty/comfort is a trickier area. I declined a really interesting opportunity that meant moving overseas. Ultimately that was probably the right decision but, on reflection, it was taken too quickly. I work an interesting role for a great company, long may that continue, but when the feet start itching or an opportunity comes my way, I need to be ready to embrace the uncertainty. Starting the year with redundancies, and losing a great member of the team, served as a reminder that loyalty in employment is transactional.

Wi-Fi Design in Higher Ed

I was recently invited to speak at the London Wi-Fi Design Day hosted by Ekahau and Open Reality. It was a fantastic day, great to catch up with people in person and some excellent talks (if I say so myself).

You can watch all the talks, from this and previous years, on this playlist. Talks from London 2021 start at number 41 as the list is ordered today.

My talk, some thoughts on Wi-Fi Design in Higher Education, can be watched below.

I was particularly challenged by Peter Mackenzie’s talk on troubleshooting and the idea we all tend to jump to answers before we’ve asked enough questions. Hugely helpful and highly recommended.