If you know any enterprise networking you’ll have come across AAA: Authentication, Authorization and Accounting. The cornerstone of network security is ensuring the client can be authenticated, so that not just anyone can connect, and often that first A is as far as it goes.

So what of Authorization?

This is what provides a way of making your Wi-Fi more efficient. If you have corporate devices, BYOD and IoT currently sitting on three separate SSIDs (not uncommon), you can put all three onto the same SSID. That reduces management traffic, since every SSID broadcasts its own beacons, and the Authorization part of AAA then determines what network access each client should have.

This might use Active Directory group membership to determine which VLAN a user gets dropped into, or what ACLs are applied. In many systems both of these are part of a role, and Role Based Access Control is the term used for this.

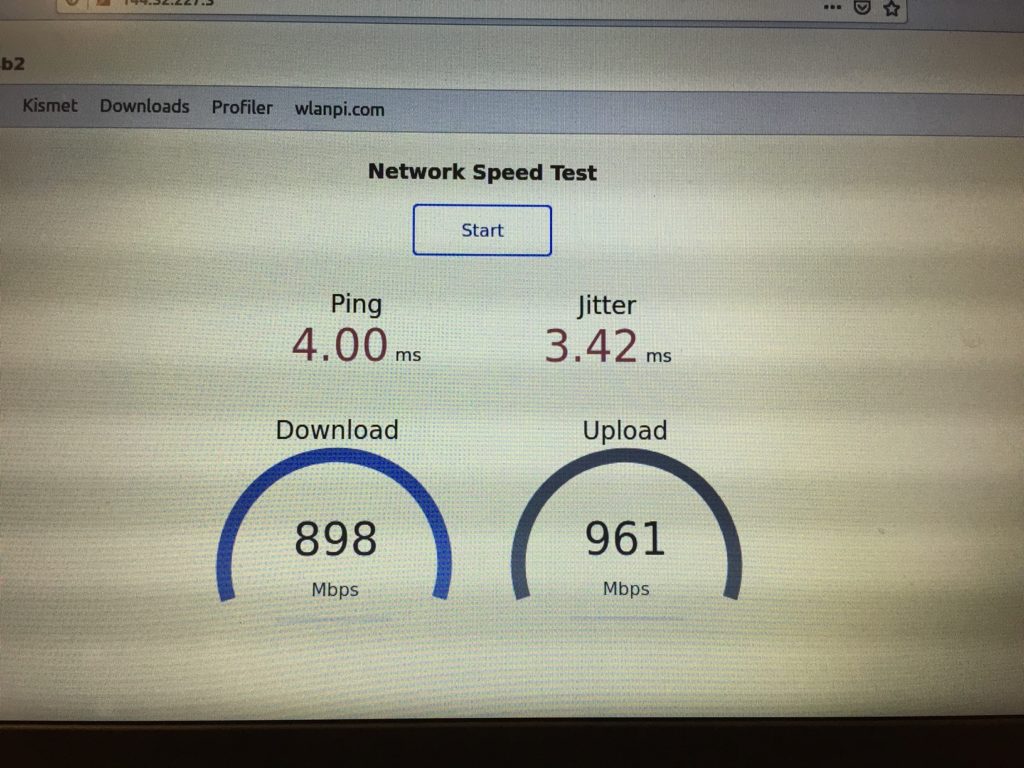
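To make the idea of a role concrete, here is a minimal Python sketch of the decision a policy server makes. It is not how ClearPass or ISE are configured; the group names, VLAN IDs and ACL names are all made up for illustration. The reply attributes are the standard RADIUS ones commonly used for dynamic VLAN assignment (`Tunnel-Type`, `Tunnel-Medium-Type`, `Tunnel-Private-Group-Id`) plus `Filter-Id` to name an ACL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    name: str
    vlan_id: int
    acl: str


# Hypothetical AD groups, VLAN IDs and ACL names, for illustration only.
GROUP_TO_ROLE = {
    "Corp-Staff": Role("corporate", 10, "acl-corp"),
    "BYOD-Users": Role("byod", 20, "acl-internet-only"),
    "IoT-Devices": Role("iot", 30, "acl-iot"),
}
DEFAULT_ROLE = Role("guest", 99, "acl-deny-internal")


def resolve_role(ad_groups):
    """Return the role for the first mapped group the user belongs to."""
    # Dict order is the priority order: earlier entries win.
    for group, role in GROUP_TO_ROLE.items():
        if group in ad_groups:
            return role
    return DEFAULT_ROLE


def radius_reply_attributes(role):
    """Build the Access-Accept attributes that place the client in its VLAN."""
    return {
        "Tunnel-Type": "VLAN",
        "Tunnel-Medium-Type": "IEEE-802",
        "Tunnel-Private-Group-Id": str(role.vlan_id),
        "Filter-Id": role.acl,
    }
```

Calling `resolve_role(["Domain Users", "BYOD-Users"])` returns the `byod` role here, and a user in none of the mapped groups falls through to the restrictive default.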

Accounting is where you see what the user actually did. In practice this usually takes the form of when they connected to the network, how long for and how much data they transferred.
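As a rough sketch of what that looks like in data terms, the Python below turns a RADIUS Accounting Stop record into a per-session summary. It assumes the record has already been parsed into a dictionary keyed by standard attribute names; the function name and output keys are my own. The octet counters are 32-bit, so the matching Gigawords attributes, which count how many times each counter has wrapped, are folded in.

```python
def summarise_session(stop_record):
    """Summarise one session from a parsed Accounting Stop record (a dict)."""

    def total_bytes(octets_key, gigawords_key):
        # Each gigaword represents one wrap of the 32-bit octet counter.
        wraps = int(stop_record.get(gigawords_key, 0))
        octets = int(stop_record.get(octets_key, 0))
        return wraps * 2**32 + octets

    return {
        "user": stop_record["User-Name"],
        "duration_s": int(stop_record["Acct-Session-Time"]),
        # "Input" is from the NAS point of view: bytes received from the client.
        "bytes_from_client": total_bytes("Acct-Input-Octets", "Acct-Input-Gigawords"),
        "bytes_to_client": total_bytes("Acct-Output-Octets", "Acct-Output-Gigawords"),
    }
```

The connect time comes from the matching Start record or the server's own timestamp, which this sketch leaves out.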

Here’s how it comes together in a recent proof of concept for a customer:

Multiple departments are in a building and it’s necessary to provide security, keeping traffic from each department separate. For regulatory purposes it’s necessary to assign network services costs to departments, but this has to be based on real world information such as bandwidth use. Finally, “we’re all one company so we don’t want to set up separate networks”.

Most networks have pretty much everything in place to do this; it’s just a question of whether all the dots are joined.

A RADIUS server (Aruba ClearPass in this case, but something like Cisco ISE could be used) is already used for 802.1X authentication. Users from different AD groups can be assigned different roles, placing them in their department’s VLAN or simply applying ACLs specifying the access the client should have. The APs or controller are configured to send RADIUS accounting to the server, which allows the administrator to accurately determine the data traffic used for each connection.
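The cost allocation side can be sketched in a few lines of Python. This is an illustration of the arithmetic, not what was built for the customer: it assumes each session summary has already been tagged with a `department` (for example from the role assigned at authentication), and the field names and the proportional-split rule are my own assumptions.

```python
from collections import defaultdict


def bytes_per_department(sessions):
    """Sum traffic in both directions for each department."""
    totals = defaultdict(int)
    for session in sessions:
        totals[session["department"]] += (
            session["bytes_from_client"] + session["bytes_to_client"]
        )
    return dict(totals)


def allocate_cost(totals, monthly_cost):
    """Split a cost between departments in proportion to bytes transferred."""
    grand_total = sum(totals.values())
    if grand_total == 0:
        # No traffic recorded, so there is nothing to apportion.
        return {dept: 0.0 for dept in totals}
    return {
        dept: monthly_cost * used / grand_total for dept, used in totals.items()
    }
```

If Finance moved three times as much data as Sales, Finance picks up three quarters of the bill. A real scheme might weight by time of day or add a fixed charge per department, but the raw numbers all come from accounting.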

Everything needed to do this has been around for quite a long time, but an awful lot of networks out there still have one SSID per VLAN, an SSID for each client type, and an SSID for each day of the week. There are much better ways to do this.